Some sketches of the wild Raven while he is still in the park, he is a joy to sit with and draw. Even if he tries to steal my sketchbook at every opportunity.

More Posts from Ourvioletdeath and Others

❤❤❤

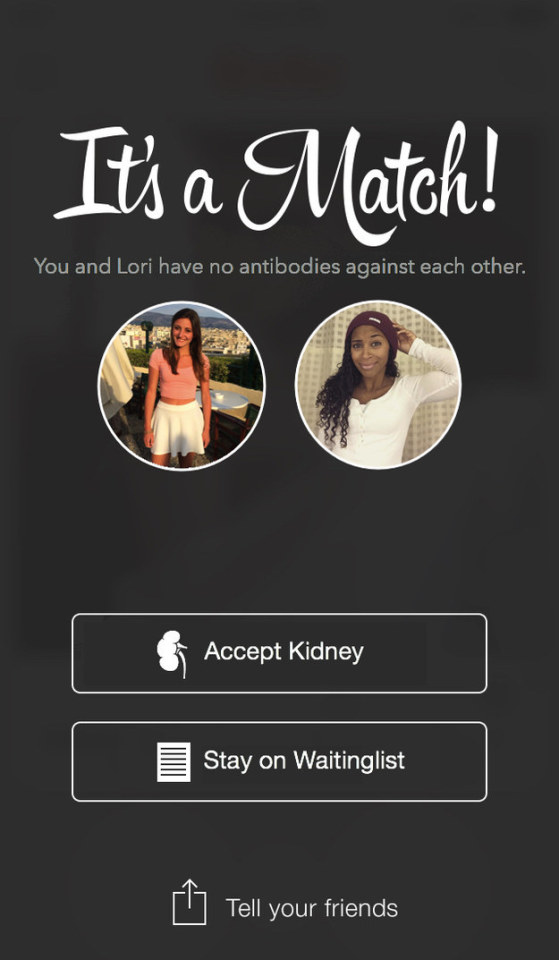

Woman Surprises Her Girlfriend With The News She Will Be Her Kidney Donor - Watch the full video

Is it possible to study science/medicine/research and pursue that as a career, even if you've majored in the humanities for undergrad and really have no prior work experience in the research field?

Yes and no.

You can go to medical school with no research experience (I did) but you will have to have the basic science pre-med background. You can major in whatever you want, though.

To pursue a scientific career you’re going to have to have some sort of STEM background and training, whether it’s technical school or bachelors/master’s level education. It’s hard to know what the requirements are without knowing more specifically what type of job you are thinking of.

Careers in scientific research are very competitive, actually. There is huge pressure to publish and there are fights for grant funds and university positions. You could work as a lab assistant in some cases with on-the-job training, but in most cases you’re going to need a pretty solid STEM background if you are going to design or run experiments. You have to have learned the basic lab techniques and the science behind your research to be able to actually do the research.

In all these cases, even with a degree in the humanities, you can go back to school and bolster your science credentials, but going in with no experience is going to be tough.

Just thought you should know I was browsing Archive Of Our Own and came across an actual fanfic about PIE. The fandom was listed as "languages (anthropomorphic)" and it had pairings such as "Mycenaean Greek/Minoan", "Gothic/Gaulic Latin" and "Pregermanic/Maglemosian". I just about died.

oh my god???!?

Language is Learned in Brain Circuits that Predate Humans

It has often been claimed that humans learn language using brain components that are specifically dedicated to this purpose. Now, new evidence strongly suggests that language is in fact learned in brain systems that are also used for many other purposes and even pre-existed humans, say researchers in PNAS.

The research combines results from multiple studies involving a total of 665 participants. It shows that children learn their native language and adults learn foreign languages in evolutionarily ancient brain circuits that also are used for tasks as diverse as remembering a shopping list and learning to drive.

“Our conclusion that language is learned in such ancient general-purpose systems contrasts with the long-standing theory that language depends on innately-specified language modules found only in humans,” says the study’s senior investigator, Michael T. Ullman, PhD, professor of neuroscience at Georgetown University School of Medicine.

“These brain systems are also found in animals — for example, rats use them when they learn to navigate a maze,” says co-author Phillip Hamrick, PhD, of Kent State University. “Whatever changes these systems might have undergone to support language, the fact that they play an important role in this critical human ability is quite remarkable.”

The study has important implications not only for understanding the biology and evolution of language and how it is learned, but also for how language learning can be improved, both for people learning a foreign language and for those with language disorders such as autism, dyslexia, or aphasia (language problems caused by brain damage such as stroke).

The research statistically synthesized findings from 16 studies that examined language learning in two well-studied brain systems: declarative and procedural memory.

The results showed that how good we are at remembering the words of a language correlates with how good we are at learning in declarative memory, which we use to memorize shopping lists or to remember the bus driver’s face or what we ate for dinner last night.

Grammar abilities, which allow us to combine words into sentences according to the rules of a language, showed a different pattern. The grammar abilities of children acquiring their native language correlated most strongly with learning in procedural memory, which we use to learn tasks such as driving, riding a bicycle, or playing a musical instrument. In adults learning a foreign language, however, grammar correlated with declarative memory at earlier stages of language learning, but with procedural memory at later stages.

The correlations were large, and were found consistently across languages (e.g., English, French, Finnish, and Japanese) and tasks (e.g., reading, listening, and speaking tasks), suggesting that the links between language and the brain systems are robust and reliable.

The findings have broad research, educational, and clinical implications, says co-author Jarrad Lum, PhD, of Deakin University in Australia.

“Researchers still know very little about the genetic and biological bases of language learning, and the new findings may lead to advances in these areas,” says Ullman. “We know much more about the genetics and biology of the brain systems than about these same aspects of language learning. Since our results suggest that language learning depends on the brain systems, the genetics, biology, and learning mechanisms of these systems may very well also hold for language.”

For example, though researchers know little about which genes underlie language, numerous genes playing particular roles in the two brain systems have been identified. The findings from this new study suggest that these genes may also play similar roles in language. Along the same lines, the evolution of these brain systems, and how they came to underlie language, should shed light on the evolution of language.

Additionally, the findings may lead to approaches that could improve foreign language learning and language problems in disorders, Ullman says.

For example, various pharmacological agents (e.g., the drug memantine) and behavioral strategies (e.g., spacing out the presentation of information) have been shown to enhance learning or retention of information in the brain systems, he says. These approaches may thus also be used to facilitate language learning, including in disorders such as aphasia, dyslexia, and autism.

“We hope and believe that this study will lead to exciting advances in our understanding of language, and in how both second language learning and language problems can be improved,” Ullman concludes.

No specific external funding supported the work. The authors report having no personal financial interests related to the study.

Researchers at King’s College London found that the drug Tideglusib stimulates the stem cells contained in the pulp of teeth so that they generate new dentine – the mineralised material under the enamel.

Teeth already have the capability of regenerating dentine if the pulp inside the tooth becomes exposed through a trauma or infection, but can only naturally make a very thin layer, and not enough to fill the deep cavities caused by tooth decay.

But Tideglusib switches off an enzyme called GSK-3 which prevents dentine from carrying on forming.

Scientists showed it is possible to soak a small biodegradable sponge with the drug and insert it into a cavity, where it triggers the growth of dentine and repairs the damage within six weeks.

The tiny sponges are made out of collagen so they melt away over time, leaving only the repaired tooth.

Rinat Voligamsi (Russian, b. 1968), A Cluster of Houses above the Woods, 2017. Oil on canvas, 120 x 120 cm.

YO.

You’re a hit man with a conscience - before every kill, you help the victim check something off their bucket list.

Peering into neural networks

Neural networks, which learn to perform computational tasks by analyzing large sets of training data, are responsible for today’s best-performing artificial intelligence systems, from speech recognition systems, to automatic translators, to self-driving cars.

But neural nets are black boxes. Once they’ve been trained, even their designers rarely have any idea what they’re doing — what data elements they’re processing and how.

Two years ago, a team of computer-vision researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) described a method for peering into the black box of a neural net trained to identify visual scenes. The method provided some interesting insights, but it required data to be sent to human reviewers recruited through Amazon’s Mechanical Turk crowdsourcing service.

At this year’s Computer Vision and Pattern Recognition conference, CSAIL researchers presented a fully automated version of the same system. Where the previous paper reported the analysis of one type of neural network trained to perform one task, the new paper reports the analysis of four types of neural networks trained to perform more than 20 tasks, including recognizing scenes and objects, colorizing grey images, and solving puzzles. Some of the new networks are so large that analyzing any one of them would have been cost-prohibitive under the old method.

The researchers also conducted several sets of experiments on their networks that not only shed light on the nature of several computer-vision and computational-photography algorithms, but could also provide some evidence about the organization of the human brain.

Neural networks are so called because they loosely resemble the human nervous system, with large numbers of fairly simple but densely connected information-processing “nodes.” Like neurons, a neural net’s nodes receive information signals from their neighbors and then either “fire” — emitting their own signals — or don’t. And as with neurons, the strength of a node’s firing response can vary.

In both the new paper and the earlier one, the MIT researchers doctored neural networks trained to perform computer vision tasks so that they disclosed the strength with which individual nodes fired in response to different input images. Then they selected the 10 input images that provoked the strongest response from each node.
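The selection step described above can be sketched in a few lines of Python. This is only an illustration, not the researchers' code: the function name `top_images_per_node`, the nested-list layout of `activations`, and the toy numbers are all assumptions made for the example.

```python
import heapq

def top_images_per_node(activations, k=10):
    """Return, for each node, the indices of the k images that made it fire hardest.

    activations[i][n] is assumed to hold the response strength of node n
    to input image i (a hypothetical layout chosen for this sketch).
    """
    num_images = len(activations)
    num_nodes = len(activations[0]) if activations else 0
    result = []
    for n in range(num_nodes):
        # nlargest returns the image indices ordered from strongest to weakest response
        best = heapq.nlargest(k, range(num_images), key=lambda i: activations[i][n])
        result.append(best)
    return result

# Toy data (made up): 4 images, 2 nodes
toy = [
    [0.1, 0.9],
    [0.8, 0.2],
    [0.5, 0.7],
    [0.3, 0.4],
]
top2 = top_images_per_node(toy, k=2)
print(top2)
```

With `k=10` and real activation values, each node would end up with its own short list of "favorite" images, which is the material the reviewers (human or automated) then had to interpret.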

In the earlier paper, the researchers sent the images to workers recruited through Mechanical Turk, who were asked to identify what the images had in common. In the new paper, they use a computer system instead.

“We catalogued 1,100 visual concepts — things like the color green, or a swirly texture, or wood material, or a human face, or a bicycle wheel, or a snowy mountaintop,” says David Bau, an MIT graduate student in electrical engineering and computer science and one of the paper’s two first authors. “We drew on several data sets that other people had developed, and merged them into a broadly and densely labeled data set of visual concepts. It’s got many, many labels, and for each label we know which pixels in which image correspond to that label.”

The paper’s other authors are Bolei Zhou, co-first author and fellow graduate student; Antonio Torralba, MIT professor of electrical engineering and computer science; Aude Oliva, CSAIL principal research scientist; and Aditya Khosla, who earned his PhD as a member of Torralba’s group and is now the chief technology officer of the medical-computing company PathAI.

The researchers also knew which pixels of which images corresponded to a given network node’s strongest responses. Today’s neural nets are organized into layers. Data are fed into the lowest layer, which processes them and passes them to the next layer, and so on. With visual data, the input images are broken into small chunks, and each chunk is fed to a separate input node.

For every strong response from a high-level node in one of their networks, the researchers could trace back the firing patterns that led to it, and thus identify the specific image pixels it was responding to. Because their system could frequently identify labels that corresponded to the precise pixel clusters that provoked a strong response from a given node, it could characterize the node’s behavior with great specificity.
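One plausible way to match a node to a label is to compare the pixels where the node responds strongly with the pixels each label covers, and to pick the label with the greatest overlap. The sketch below uses intersection-over-union as the overlap score; that scoring rule, the 0.5 threshold, the function names, and the toy data are assumptions for illustration, since the article does not spell out how the system scores a match.

```python
def iou(pixels_a, pixels_b):
    """Intersection-over-union of two sets of (row, col) pixel coordinates."""
    union = pixels_a | pixels_b
    if not union:
        return 0.0
    return len(pixels_a & pixels_b) / len(union)

def strong_pixels(activation_map, threshold):
    """Pixels where the node's (already pixel-aligned) response exceeds the threshold."""
    return {
        (r, c)
        for r, row in enumerate(activation_map)
        for c, value in enumerate(row)
        if value > threshold
    }

def best_label(activation_map, label_masks, threshold=0.5):
    """Return the label whose pixel mask overlaps the node's strong response the most."""
    active = strong_pixels(activation_map, threshold)
    scores = {label: iou(active, pixels) for label, pixels in label_masks.items()}
    label = max(scores, key=scores.get)
    return label, scores[label]

# Toy 3x3 response map and two hypothetical label masks
response = [
    [0.9, 0.8, 0.1],
    [0.7, 0.6, 0.0],
    [0.1, 0.0, 0.0],
]
masks = {
    "bicycle wheel": {(0, 0), (0, 1), (1, 0), (1, 1)},
    "green": {(0, 0), (2, 2)},
}
label, score = best_label(response, masks)
print(label, score)
```

Here the node's strong pixels coincide exactly with the "bicycle wheel" mask, so that label wins with a score of 1.0, while "green" overlaps on only one pixel out of five.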

The researchers organized the visual concepts in their database into a hierarchy. Each level of the hierarchy incorporates concepts from the level below, beginning with colors and working upward through textures, materials, parts, objects, and scenes. Typically, lower layers of a neural network would fire in response to simpler visual properties — such as colors and textures — and higher layers would fire in response to more complex properties.

But the hierarchy also allowed the researchers to quantify the emphasis that networks trained to perform different tasks placed on different visual properties. For instance, a network trained to colorize black-and-white images devoted a large majority of its nodes to recognizing textures. Another network, when trained to track objects across several frames of video, devoted a higher percentage of its nodes to scene recognition than it did when trained to recognize scenes; in that case, many of its nodes were in fact dedicated to object detection.
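Once each node has been assigned a concept, measuring a network's emphasis comes down to counting how many nodes fall into each level of the hierarchy. A minimal sketch, assuming a hypothetical list with one category name per node (the assignments below are invented, not taken from the paper):

```python
from collections import Counter

def category_shares(node_categories):
    """Fraction of nodes assigned to each concept category."""
    total = len(node_categories)
    if total == 0:
        return {}
    counts = Counter(node_categories)
    return {category: count / total for category, count in counts.items()}

# Invented assignments for a five-node network
nodes = ["texture", "texture", "texture", "object", "color"]
shares = category_shares(nodes)
print(shares)
```

Comparing these shares across networks trained on different tasks is what would reveal, for example, a colorization network leaning heavily on texture detectors.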

One of the researchers’ experiments could conceivably shed light on a vexed question in neuroscience. Research involving human subjects with electrodes implanted in their brains to control severe neurological disorders has seemed to suggest that individual neurons in the brain fire in response to specific visual stimuli. This hypothesis, originally called the grandmother-neuron hypothesis, is more familiar to a recent generation of neuroscientists as the Jennifer-Aniston-neuron hypothesis, after the discovery that several neurological patients had neurons that appeared to respond only to depictions of particular Hollywood celebrities.

Many neuroscientists dispute this interpretation. They argue that shifting constellations of neurons, rather than individual neurons, anchor sensory discriminations in the brain. Thus, the so-called Jennifer Aniston neuron is merely one of many neurons that collectively fire in response to images of Jennifer Aniston. And it’s probably part of many other constellations that fire in response to stimuli that haven’t been tested yet.

Because their new analytic technique is fully automated, the MIT researchers were able to test whether something similar takes place in a neural network trained to recognize visual scenes. In addition to identifying individual network nodes that were tuned to particular visual concepts, they also considered randomly selected combinations of nodes. Combinations of nodes, however, picked out far fewer visual concepts than individual nodes did — roughly 80 percent fewer.

“To my eye, this is suggesting that neural networks are actually trying to approximate getting a grandmother neuron,” Bau says. “They’re not trying to just smear the idea of grandmother all over the place. They’re trying to assign it to a neuron. It’s this interesting hint of this structure that most people don’t believe is that simple.”

The Ancient Dragon had awoken, looked over the terrified villagers… and immediately started gushing about how cute they were.

Isn’t it funny how on TV: 1) Getting shot in the shoulder is always treated like it’s a minor flesh wound. It’s not like you’ve probably sustained serious or even permanent damage to your arm, or even had the bullet go through your lung… nope. 2) People are always dying of some vague infectious disease that’s only hinted at. I mean, it’s probably TB, but still, it’d be nice to get some closure. 3) People catch something after a walk in the rain and then die. 4) Cardiac arrest is like, totally NBD. A couple of (really badly performed) pumps on the chest, and the victim is up and talking as if they didn’t just drop dead. Like, if you’ve really just arrested, you’ve probably got 5 broken ribs, an ET tube in your throat and you’ve just earned yourself a ticket to ITU.
